Exascale computing is the next milestone in the development of supercomputers. Able to process information much faster than today’s most powerful supercomputers, exascale computers will give scientists a new tool for addressing some of the biggest challenges facing our world, from climate change to understanding cancer to designing new kinds of materials.

One way scientists measure computer performance is in floating point operations per second (FLOPS). These operations are simple arithmetic, such as addition and multiplication. Because performance figures in FLOPS have so many zeros, researchers use prefixes such as giga, tera, and exa, where “exa” means 18 zeros. An exascale computer can therefore perform more than 1,000,000,000,000,000,000 FLOPS, or 1 exaFLOPS. DOE is deploying the United States’ first exascale computers: Frontier at Oak Ridge National Laboratory (ORNL), Aurora at Argonne National Laboratory, and El Capitan at Lawrence Livermore National Laboratory.
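As a small illustration of the prefixes described above, the following Python sketch converts a raw FLOPS figure into a prefixed string. The function name `format_flops` and the three-decimal formatting are illustrative choices, not part of any standard tool.

```python
# SI prefixes commonly used with FLOPS, largest first, mapped to powers of ten.
SI_PREFIXES = [
    ("exa", 18),
    ("peta", 15),
    ("tera", 12),
    ("giga", 9),
    ("mega", 6),
    ("kilo", 3),
]


def format_flops(flops: float) -> str:
    """Return a readable string such as '1.000 exaFLOPS' (hypothetical helper)."""
    for name, exponent in SI_PREFIXES:
        if flops >= 10 ** exponent:
            return f"{flops / 10 ** exponent:.3f} {name}FLOPS"
    return f"{flops:.3f} FLOPS"


if __name__ == "__main__":
    print(format_flops(1e18))    # one quintillion operations per second
    print(format_flops(2.5e12))  # a figure in the teraFLOPS range
```

The exaFLOPS row corresponds to the 18 zeros mentioned in the text.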

Exascale supercomputers will allow scientists to create more realistic Earth system and climate models. They will help researchers understand the nanoscience behind new materials. Exascale computers will help us build future fusion power plants. They will power new studies of the universe, from particle physics to the formation of stars. And these computers will help ensure the safety and security of the United States by supporting tasks such as the maintenance of the US nuclear deterrent.

For decades, maximizing performance has been the chief concern of both hardware architects and software developers. With the end of performance scaling through higher CPU clock frequencies (a limit usually discussed alongside the slowing of Moore's Law), the industry moved from single-core to multi-core and many-core architectures. As a result, hardware acceleration and the use of co-processors alongside the CPU are becoming a popular way to gain performance while keeping the power budget low. This includes both new hardware customized for a particular application domain, such as the Tensor Processing Unit (TPU), Vision Processing Unit (VPU), and Neural Processing Unit (NPU), and modifications of existing platforms, such as Intel Xeon Phi co-processors, general-purpose GPUs, and Field Programmable Gate Arrays (FPGAs). Such accelerators, together with the main processors and memory, constitute a heterogeneous system. However, this heterogeneity makes performance and energy optimization of modern HPC platforms unprecedentedly difficult.

The focus on maximizing HPC performance, measured in hundreds of trillions of floating point operations per second (FLOPS), has led supercomputers to consume enormous amounts of electricity, both for computing and for cooling. As a consequence, current HPC systems already draw megawatts of power, and energy efficiency is becoming a design concern as important as performance in ICT. For example, a leading supercomputer such as Summit consumes around 13 megawatts, roughly the power draw of more than 10,000 households. Because of such high power consumption, future HPC systems are highly likely to be power constrained. For example, DOE aims to deploy an exascale supercomputer capable of performing 1 million trillion (or 10^18) floating-point operations per second in a power envelope of 20-30 megawatts. The initial target was to deliver one double-precision exaflops of compute capability for 20 megawatts of power; another target was 2 exaflops for 29 megawatts when running at full power. With these factors in mind, HPE Cray designed the Frontier supercomputer, powered by AMD, for growing accelerated computing needs under power constraints.
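The power targets quoted above imply a minimum energy efficiency. The Python sketch below works out that arithmetic; the function name `required_gflops_per_watt` is hypothetical, and the inputs are simply the figures stated in the text (1 exaflops in 20 MW, 2 exaflops in 29 MW).

```python
def required_gflops_per_watt(target_flops: float, power_watts: float) -> float:
    """Efficiency (gigaflops per watt) needed to hit target_flops within power_watts."""
    return target_flops / power_watts / 1e9


# Targets as stated in the text.
one_exaflop_in_20_mw = required_gflops_per_watt(1e18, 20e6)
two_exaflops_in_29_mw = required_gflops_per_watt(2e18, 29e6)

if __name__ == "__main__":
    print(f"1 EF in 20 MW needs {one_exaflop_in_20_mw:.2f} GF/W")
    print(f"2 EF in 29 MW needs {two_exaflops_in_29_mw:.2f} GF/W")
```

Under these assumptions, the first target works out to 50 gigaflops per watt and the second to roughly 69 gigaflops per watt.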

The Frontier supercomputer, built at the Department of Energy's Oak Ridge National Laboratory in Tennessee, has become the world's first known supercomputer to demonstrate a performance of 1.1 exaFLOPS (1.1 quintillion floating point operations per second, or FLOPS). Frontier's exascale performance is enabled by some of the world's most advanced technology from HPE and AMD.

Frontier, powered by AMD, is the first exascale machine, meaning it can process more than a quintillion calculations per second; its HPL score is 1.102 exaflop/s. Based on the latest HPE Cray EX235a architecture and equipped with AMD EPYC 64C 2GHz processors, the system has 8,730,112 total cores and a power efficiency rating of 52.23 gigaflops/watt. For data transfer it relies on HPE's Ethernet-based Slingshot interconnect.

Exascale is the next level of computing performance. By solving calculations five times faster than today’s top supercomputers, exceeding a quintillion (or 10^18) calculations per second, exascale systems will enable scientists to develop new technologies for energy, medicine, and materials. The Oak Ridge Leadership Computing Facility is home to one of America’s first exascale systems, Frontier, which will help guide researchers to new discoveries at exascale.

Frontier is based on HPE Cray’s new EX architecture and Slingshot interconnect, with 3rd Gen AMD EPYC™ CPUs optimized for HPC and AI and AMD Instinct™ MI250X accelerators. It delivers Linpack (double-precision floating point, FP64) compute performance of 1.1 EFLOPS (exaFLOPS).

The Frontier test and development system (TDS) secured first place on the Green500 list, delivering a power efficiency of 62.68 gigaflops/watt from a single cabinet of optimised 3rd Gen AMD EPYC processors and AMD Instinct MI250X accelerators. Frontier could lead to breakthroughs in medicine, astronomy, and more.

The HPE/AMD system delivers 1.102 Linpack exaflops of computing power in a 21.1-megawatt power envelope, an efficiency of 52.23 gigaflops per watt. At its very peak, Frontier uses only about 29 megawatts. In the benchmark run it sustained 1.1 exaflops, and its theoretical peak is about 2 exaflops.
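The efficiency figure can be checked directly from the Linpack result and the power draw. The sketch below is a minimal Python illustration using only numbers quoted in this article; the variable and function names are made up for the example.

```python
def gflops_per_watt(flops: float, watts: float) -> float:
    """Energy efficiency in gigaflops per watt."""
    return flops / watts / 1e9


# Figures quoted in the article.
HPL_FLOPS = 1.102e18       # 1.102 Linpack exaflops
HPL_POWER_WATTS = 21.1e6   # 21.1 megawatts during the run
TDS_GFLOPS_PER_WATT = 62.68  # single-cabinet Green500 result

frontier_efficiency = gflops_per_watt(HPL_FLOPS, HPL_POWER_WATTS)

# Power that one exaflops would need at the measured efficiency, in megawatts.
mw_per_exaflop = 1e18 / (frontier_efficiency * 1e9) / 1e6

# How much more efficient the single-cabinet TDS run was than the full system.
tds_advantage = TDS_GFLOPS_PER_WATT / frontier_efficiency

if __name__ == "__main__":
    print(f"Full system: {frontier_efficiency:.2f} GF/W")
    print(f"Power per exaflops: {mw_per_exaflop:.2f} MW")
    print(f"TDS vs full system: {tds_advantage:.2f}x")
```

By this arithmetic, one exaflops at the measured efficiency needs a little over 19 megawatts, and the single-cabinet run was about 20 percent more efficient than the full system.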

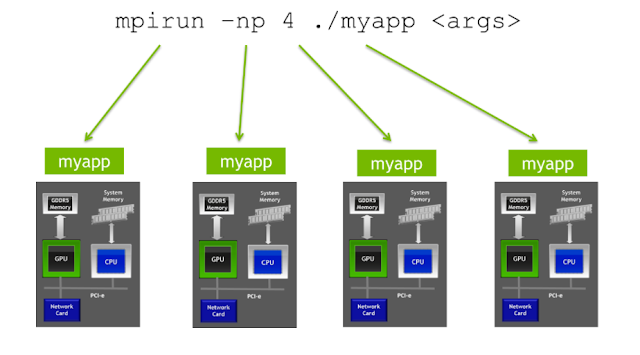

Node diagram:

Frontier is an HPE Cray EX system with 74 cabinets holding 9,408 nodes. Each node has one AMD EPYC CPU and four AMD MI250X GPUs. It is all wired together with the high-speed Cray interconnect, called Slingshot. It is a water-cooled system, and there have recently been good efforts to use computational fluid dynamics to model the water flow in the cooling system. These are incredibly well-instrumented machines, with liquid cooling that adjusts dynamically to the workload. Sensors monitor temperatures down to the individual components on the individual node boards, so the cooling levels can be adjusted up and down to keep the system at a safe temperature. The single-cabinet run was estimated to deliver over 60 gigaflops per watt.
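The node layout above translates into system-wide totals with simple multiplication. The Python sketch below uses only the counts given in the text (74 cabinets, 9,408 nodes, one CPU and four GPUs per node); the function name `system_totals` is hypothetical.

```python
CABINETS = 74
NODES = 9_408
CPUS_PER_NODE = 1
GPUS_PER_NODE = 4


def system_totals(nodes: int, cpus_per_node: int, gpus_per_node: int) -> dict:
    """Total CPU and GPU package counts for a homogeneous set of nodes."""
    return {
        "cpus": nodes * cpus_per_node,
        "gpus": nodes * gpus_per_node,
    }


totals = system_totals(NODES, CPUS_PER_NODE, GPUS_PER_NODE)

# Average only: the text does not say how nodes are spread across cabinets.
avg_nodes_per_cabinet = NODES / CABINETS

if __name__ == "__main__":
    print(f"CPUs: {totals['cpus']:,}")
    print(f"GPUs: {totals['gpus']:,}")
    print(f"Average nodes per cabinet: {avg_nodes_per_cabinet:.1f}")
```

These counts give 9,408 CPUs and 37,632 GPUs in total, with an average of about 127 nodes per cabinet.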

Frontier is housed in the datacenter that formerly held the Titan supercomputer. Titan was removed and the datacenter was refurbished, which required more power and more cooling: 40 megawatts of power were brought into the datacenter, and 40 megawatts of cooling are available. Frontier uses only about 29 megawatts of that at its very peak. The machine is even a little quieter than Summit because it is 100 percent liquid cooled, with no fans and no rear doors exchanging heat with the room. The [fan] noise that remains comes from the storage systems, which are also from HPE and are air-cooled.

At the OLCF [Oak Ridge Leadership Computing Facility], the Center for Accelerated Application Readiness (CAAR) is the vehicle for application readiness. That group supports eight apps for the OLCF and 12 apps for the Exascale Computing Project. Frontier was OLCF-5; the next system will be OLCF-6.

The 1.1-exaflops result was confirmed in a benchmarking test called High-Performance Linpack (HPL). As impressive as that sounds, the ultimate limits of Frontier are even more staggering: the supercomputer is theoretically capable of a peak performance of 2 quintillion calculations per second. Among all the massively powerful supercomputers on the list, only Frontier has achieved true exascale performance, at least where it counts, according to TOP500.

Some of the most exciting work is in artificial intelligence. Research teams plan to use Frontier to develop better treatments for different diseases and to improve the efficacy of treatments, and these systems can digest incredible amounts of data. Given thousands of laboratory or pathology reports, a supercomputer can draw inferences across them that no human being could.

Summit, a previous TOP500 number-one system built by IBM and Nvidia, is still in service at Oak Ridge and is highly utilized. It will run for at least a year alongside Frontier so that the team can make sure Frontier is up and stable and give users time to move their data and applications to the new system.

With the Linpack exaflops milestone achieved by the Frontier supercomputer at Oak Ridge National Laboratory, the United States is turning its attention to the next crop of exascale machines, some 5 to 10 times more performant than Frontier. At least one such system is being planned for the 2025-2030 timeline, and the DOE is soliciting input from the vendor community to inform the design and procurement process. The goal is systems that can solve scientific problems 5 to 10 times faster than the current state-of-the-art systems, or solve more complex problems, such as those with more physics or requirements for higher fidelity. These future systems will include associated networks and data hierarchies. A capable software stack will need to meet the requirements of a broad spectrum of applications and workloads, including large-scale computational science campaigns in modeling and simulation, machine intelligence, and integrated data analysis. The DOE expects these systems to operate within a power envelope of 20–60 MW. They must also be sufficiently resilient to hardware and software failures to minimize the need for user intervention.

The candidates could include the successor to Frontier (aka OLCF-6), the successor to Aurora (aka ALCF-5), the successor to Crossroads (aka ATS-5), the successor to El Capitan (aka ATS-6), and a future NERSC system (possibly NERSC-11). Note that of the “predecessor systems,” only Frontier has been installed so far.

A key thrust of the DOE supercomputing strategy is the creation of an Advanced Computing Ecosystem (ACE) that enables “integration with other DOE facilities, including light source, data, materials science, and advanced manufacturing.” The next generation of supercomputers will need to be capable of being integrated into an ACE environment that supports automated workflows, combining one or more of these facilities to reduce the time from experiment and observation to scientific insight.

The original CORAL contract called for three pre-exascale systems (~100-200 petaflops each) with at least two different architectures to manage risk. Only two systems, Summit at Oak Ridge and Sierra at Livermore, were completed in the intended timeframe, using nearly the same heterogeneous IBM-Nvidia architecture. CORAL-2 took a similar tack, calling for two or three exascale-class systems with at least two distinct architectures. The program is procuring two systems, Frontier and El Capitan, both based on a similar heterogeneous HPE AMD+AMD architecture. The redefined Aurora, which is based on the heterogeneous HPE Intel+Intel architecture, becomes the “architecturally-diverse” third system (although it technically still belongs to the first CORAL contract).

-----

Reference:

https://www.olcf.ornl.gov/wp-content/uploads/2019/05/frontier_specsheet.pdf

https://www.researchgate.net/figure/Power-consumption-by-top-10-supercomputers-over-time-based-on-the-results-published_fig2_350176475

https://www.youtube.com/watch?v=HvJGsF4t2Tc